KAYAK’S Most Interesting A/B Test


Apptimize: Mobile Strategy, UX and Growth

This is the inaugural Apptimize podcast! Our CEO, Nancy Hua, speaks with Vinayak Ranade, Director of Engineering for Mobile at KAYAK about how to identify what to test. Listen to the podcast and/or read the transcript below.

Nancy Hua: Hi everyone! I'm Nancy, the CEO of Apptimize. I'm here with an old MIT friend, Vinayak, the Director of Engineering for Mobile at KAYAK. Vinayak has been at KAYAK for three years, leading KAYAK's development of iOS, Android, and Windows apps. He's had a lot of success optimizing KAYAK's mobile app through A/B testing, and in this interview he's going to share some of what he's learned with us. Welcome to the Apptimize podcast, Vinayak. Thank you for coming. What's the most interesting A/B test you've done?

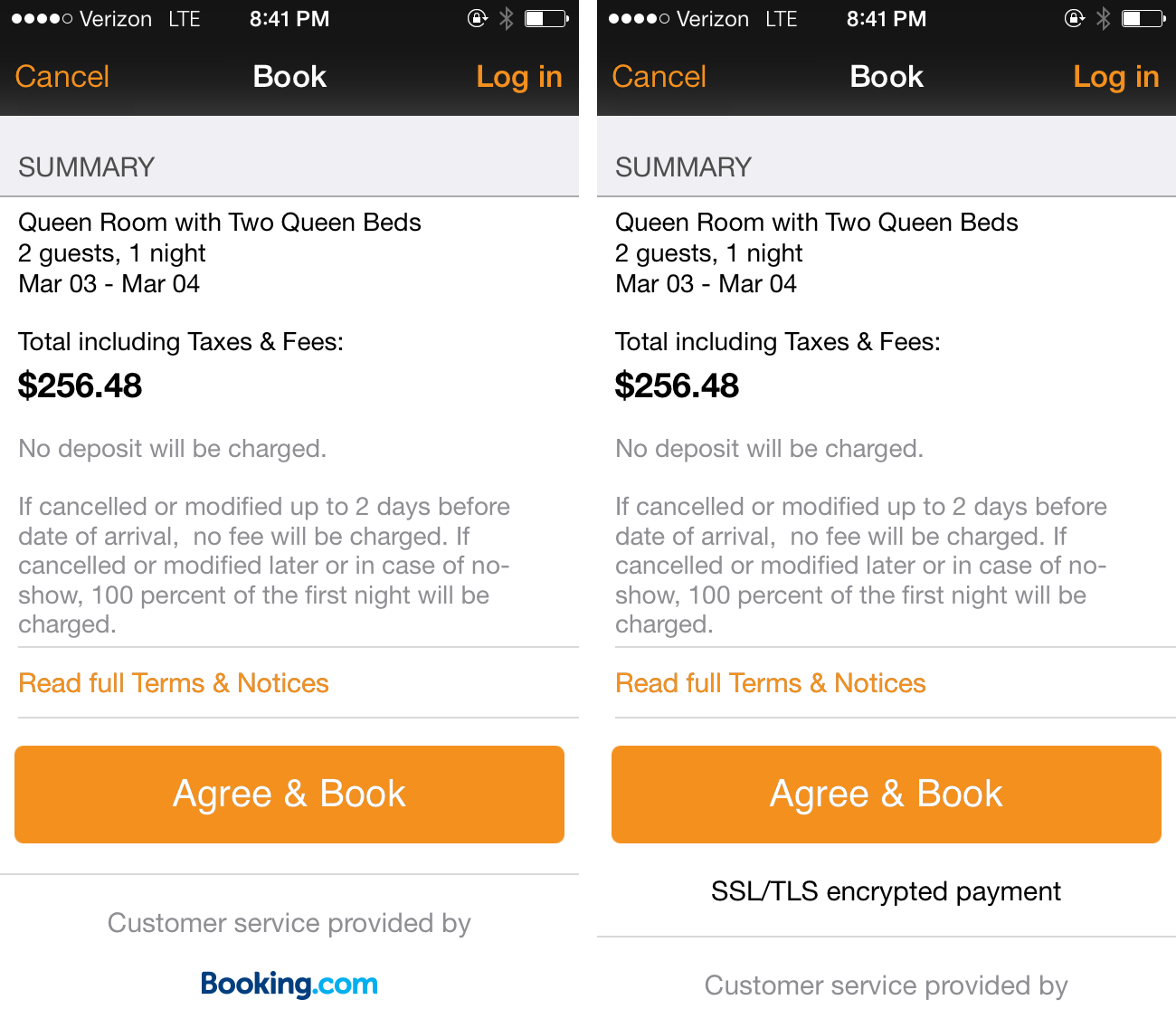

Vinayak Ranade: One of the most interesting A/B tests we've done recently is one that confirmed one of our design decisions, and it was related to user security in the app. One of the things users worry about on mobile today is paying for stuff, and one of the reasons they worry is that they don't know whether it's secure. What if you're going through a tunnel and your connection goes down? What if someone is snooping on your wifi? For some reason, people don't feel as secure on mobile as they do on desktop. And to be fair, they didn't feel that secure on desktop either when they first started out. So, to help users through this process and make them feel comfortable when they're actually buying something and charging their credit card, at KAYAK we decided to add a small message at the bottom reassuring the user that everything's going to be okay if they buy something. The idea was to tell the user that it's really okay to make this payment.

A different company tried this and actually A/B tested it, and they found that removing this messaging improved their conversions, which was very puzzling. Their theory was that if you remove the message, you are no longer reminding the user about security in the first place. So their thesis was that users don't generally think about this, and if you put the message there, you're reminding them that the purchase could have been insecure. That's why users then tend to shy away from clicking and actually spending money in your app.

So we decided to run our own experiment, and we found the opposite: when we removed the messaging, people tended to book less. This was interesting for us because we expected that our original design decision might not have been the right one, but it turned out that it was. It was an interesting experiment because it was about user behavior, and it was something we weren't super sure about, but it definitely confirmed the design decisions we had made originally.

NH: That makes a lot of sense. Just because something worked for someone else doesn’t mean it will work for all apps. You have to try it out yourself.

VR: Absolutely.

NH: So with everything you could be A/B testing, how do you prioritize what you test so you can work on something that will have a big impact?

VR: Normally, our test requests come from many different sources. Sometimes they're from executives, sometimes from engineers, sometimes from salespeople who are trying out an idea a client suggested, and sometimes they're just changes we're going to make anyway and want to measure. We try to keep a balance between being a little bit bold and reckless and making small, incremental changes to the app. Generally, one or two people, including myself, collect all of the test requests, go through them, figure out which ones make sense and which ones don't, and make sure we run at least a few tests during every client release. Generally those tests focus on a short list of features that we're currently working on and optimizing. Basically, three things go into which ones we choose: the actual bottom-line impact on the company, the development effort involved, and the question "Is this experiment going to teach us something fundamentally new?"

NH: What’s an example of something you’ll A/B test vs. something you can just implement?

VR: Sure. Take something like a big redesign. Recently we redesigned our app for iOS 7, and every Apple developer knows that on the day Apple announced, "Here are all of the tools you need, here's the new Xcode, here's the new SDK, and we're shipping iOS 7 at the end of the month, so time to go," most developers were kind of peeing their pants.

If we had spent time incrementally testing every single change we made in the redesign, we'd never have made it. A lot of companies did do that, and they were three months late to the game. So that was one situation where we decided, okay, we're just going to go for it. We're going to trust our designers, we know they're good. So we all put everything else aside for six weeks and cranked out the redesign.

This redesign was informed by experiment results from previous UIs. We tried really hard not to repeat the same mistakes and to learn from our past experience, but we didn't actively experiment during the redesign itself. We shipped it on time, on release day, we got a ton of downloads, and we got featured in the Apple App Store as a new, beautiful iOS 7 app, which, in today's growing app marketplaces, is huge for any company, even one as big as KAYAK. After that, we spent the next couple of months testing some of the smaller stuff, like whether we wanted to tweak the messaging here or add an extra line there, fine-tuning what we'd already done.

It will be interesting to see what wins in the long run, because the incremental experiment theory is very much like evolution: you throw everything in the bag and see what survives. Right? And then there's the intelligent design theory, where you look at things from a holistic perspective and say, this is what we think is good for the user, this is what we think is good for the company as a whole, and this is the direction we want the product to go in. It's probably going to hit a few speed bumps along the way. But experiments tend to get you to local maxima, so if you combine intelligent design first and evolution afterward, you can put yourself on the biggest hill to climb instead of just looking for the nearest one.

NH: Thank you for sharing this with us, Vinayak!

Update: While this is the end of the podcast, the full conversation was actually over an hour long. Read more about KAYAK’s testing strategies in Part II of the interview.

The post KAYAK’S Most Interesting A/B Test appeared first on Apptimize.
